Analyzing HCA Data in Terra

Terra is a scalable cloud platform for biomedical research that offers the ability to use data, tools, and workflows to perform interactive analysis in the cloud.

In this tutorial, you will learn how to send search results from the HCA Data Explorer to Terra and how to run a basic workflow with that data.

Before starting

Before trying this tutorial, complete the steps below:

- Read the Accessing HCA Data and Metadata guide to learn how to find HCA data in the Data Explorer.

- Visit Terra Support to learn how to register for a Terra account.

- Download the Google Chrome browser, which is recommended for use with Terra.

For additional details about analyzing data in the cloud with Terra, see the Terra Support guides.

HCA data-related tutorial workspaces

In addition to the tutorial below, a Terra account gives you access to several tutorial workspaces dedicated to HCA data:

- Optimus workspace for processing 10x data with the Optimus Pipeline.

- Smart-seq2 workspace for processing Smart-seq2 data with the Smart-seq2 Multi-Sample Pipeline.

- Intro-to-HCA-data-on-Terra workspace for exporting HCA data and analyzing it with community tools like Seurat, Scanpy, Cumulus, and Pegasus.

Video demonstration of importing HCA data to a Terra workspace

Before trying the tutorial, you might want to watch this brief video demonstration of importing HCA data into Terra and using the Intro-to-HCA-data-on-Terra workspace. The video is also available in this Terra blog post about the workspace.

Tutorial — Automated processing of HCA BAM files in Terra

This tutorial guides you through finding and setting up a simple example workflow in Terra that you can use to process BAM files exported from the HCA Data Explorer.

For an additional Terra tutorial on using community tools with HCA data, see the Intro-to-HCA-data-on-Terra workspace.

Step one: Finding BAM files with the HCA Data Explorer and exporting to Terra

You can use the HCA Data Explorer to find data to export to Terra. The Data Explorer lists projects with data available for download and lets you filter the data for a number of attributes.

Using the Data Explorer, select some data that interests you. Choose anything that looks interesting: we will be running a very simple workflow that generates MD5 checksums of files, so the type of data is not important.

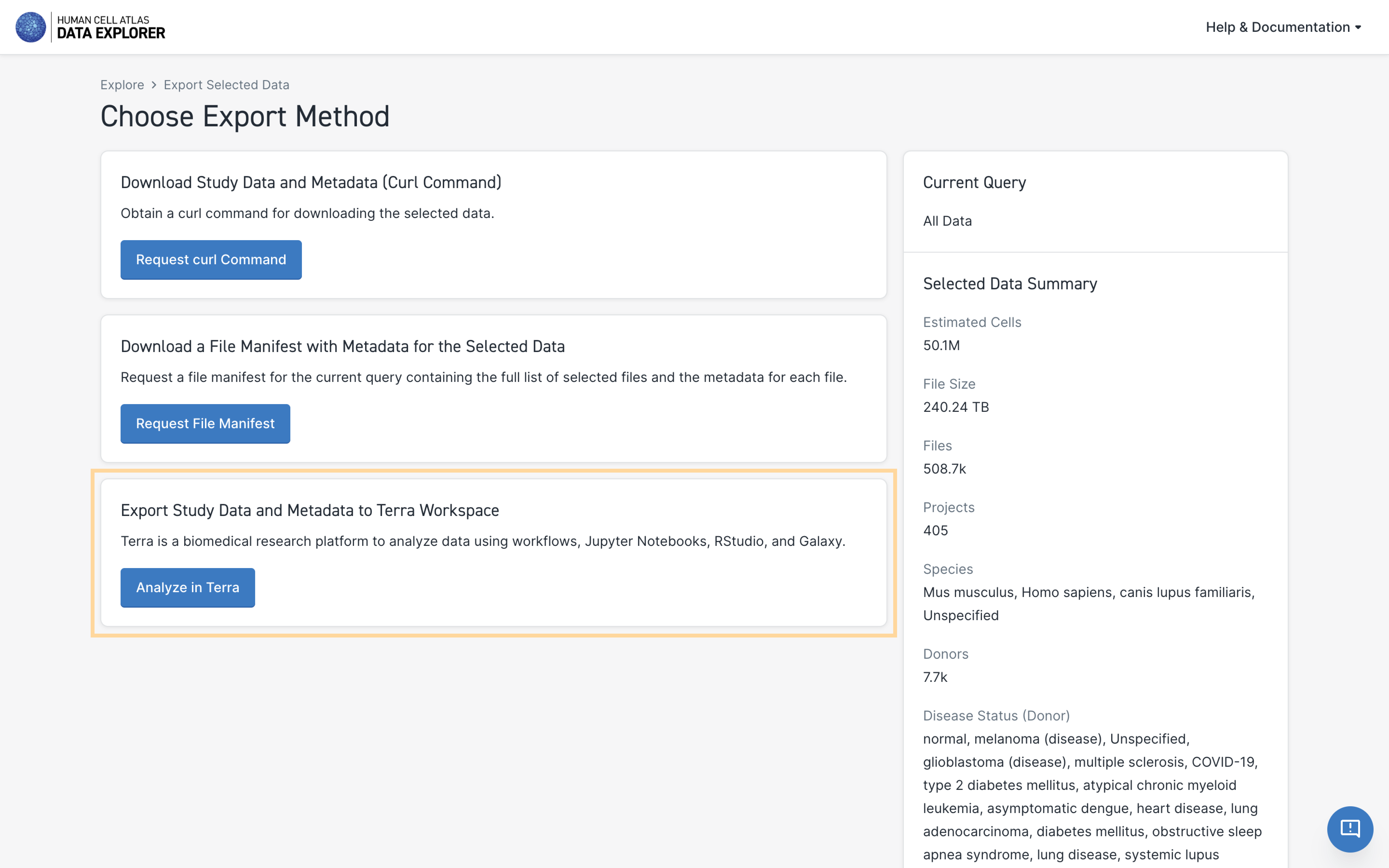

When you have found a data set of interest, use the following steps:

- Click on the big blue Export Selected Data icon at the top right of the page.

- Click on the Analyze in Terra icon at the bottom.

- Click on the species you want to analyze.

- Select the files you want to export by clicking the checkbox next to the file type.

- Select the download format.

- Click the Request Link icon.

You'll be redirected to a Data Portal page containing an export link.

- Click Open Terra.

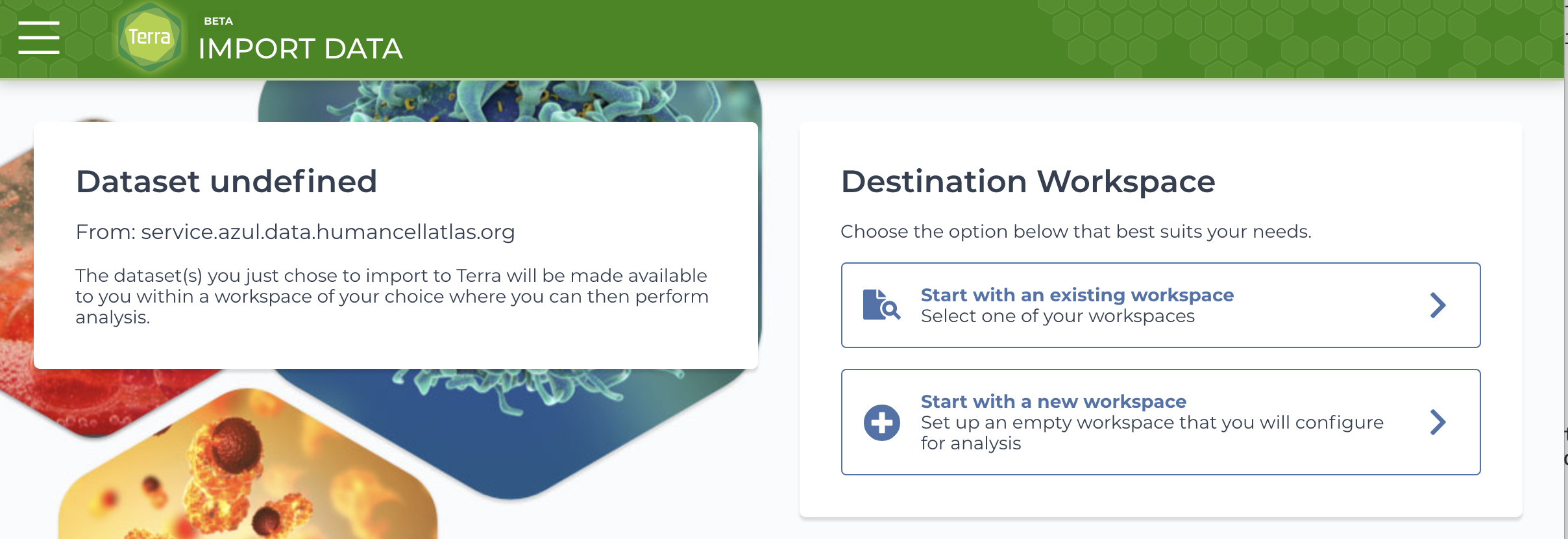

You'll be redirected to the Terra import page.

- If you've already created a Terra workspace, select the workspace by choosing Start with an existing workspace. Otherwise, choose Start with a new workspace.

Step two: Finding a workflow in Dockstore

A workflow is simply a set of steps you want to use to transform or analyze your data, often written as a script in a language like Python or R. Terra integrates with scripts written in a particular workflow language called the Workflow Description Language (WDL).

We can find WDL workflows that use our analysis tools of interest in public repositories like Dockstore, a platform for bioscience tools and workflows that use Docker containers. For more information about how to use Dockstore, see the Dockstore documentation.

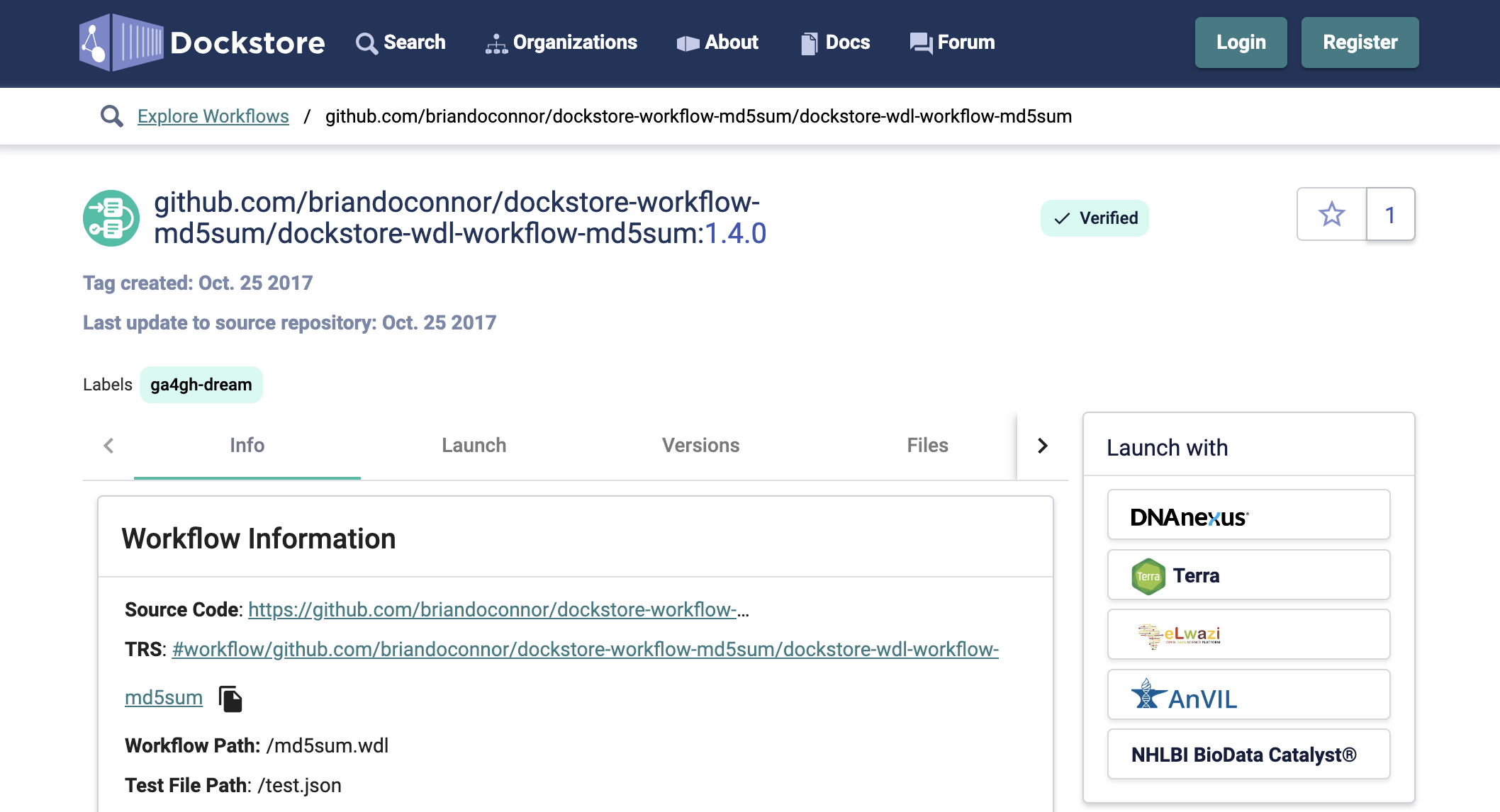

We'll use Dockstore in this tutorial to find a workflow to run on the data we've just exported. Specifically, we'll look for the dockstore-wdl-workflow-md5sum workflow, which generates an MD5 checksum for a given file (or files).
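To make the goal of the workflow concrete, here is a minimal Python sketch of what "generating an MD5 checksum for a file" means. It is not the code inside the Dockstore workflow; the function name `md5_checksum` and the chunk size are our own choices for illustration.

```python
import hashlib


def md5_checksum(path, chunk_size=1024 * 1024):
    """Return the hexadecimal MD5 digest of the file at `path`."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so a large BAM file is never held fully in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Calling `md5_checksum("my_file.bam")` on a local file (the file name here is a placeholder) returns a 32-character hexadecimal string. Two files with identical contents always produce the same string, which is why checksums are useful for confirming that a file was transferred intact.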

We will need to import this workflow from Dockstore using the following steps:

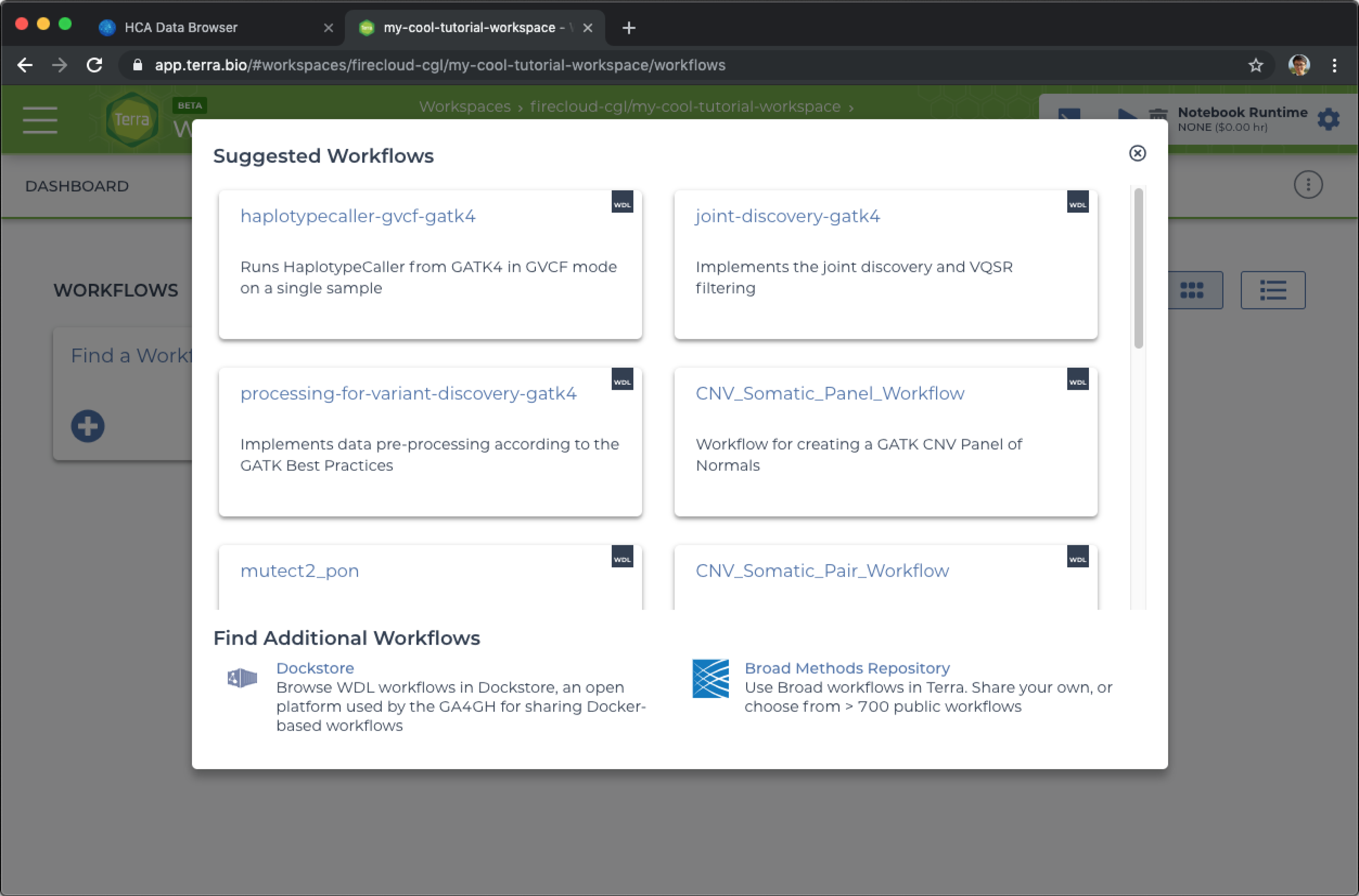

- Select the Workflows tab at the top of the workspace.

- Click the Find a Workflow card.

- Click on the Dockstore link at the bottom of the pop-up.

- Search for md5sum. The search box is on the left side of the page. Results should load instantly. Look for a workflow named briandoconnor/dockstore-workflow-md5sum/dockstore-wdl-workflow-md5sum.

- Click on the workflow.

- Click on the Terra option in the Launch with box.

- Select the destination workspace (the same workspace to which you exported the HCA data) and Import the workflow.

You'll be redirected to the workflow setup page in Terra. Continue to Step three.

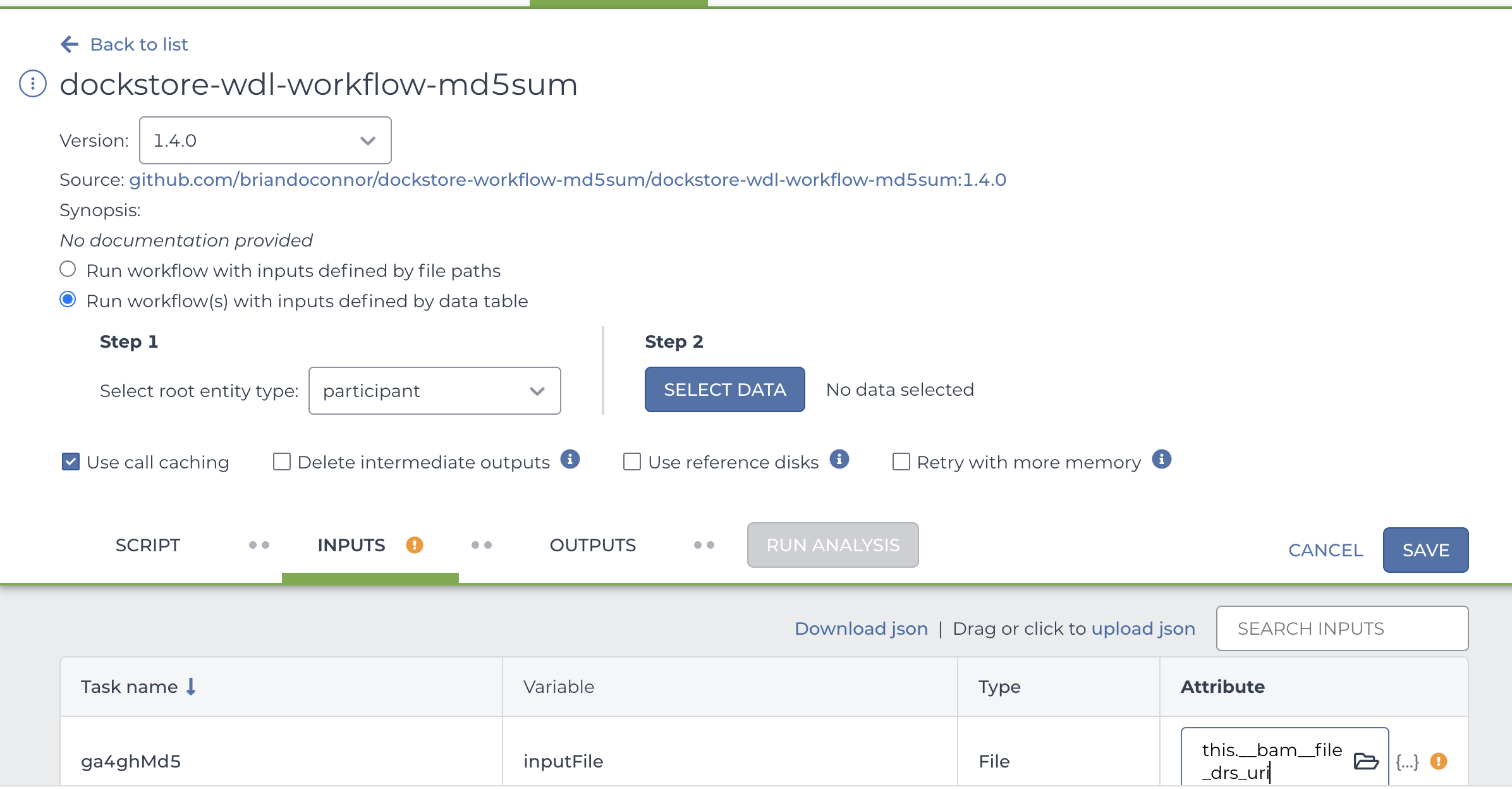

Step three: Setting up and running the workflow in Terra

To analyze the data we exported to Terra, we need to set up the workflow so that it reads from the workspace data table on the Data page.

Specifically, we want the MD5 checksum workflow to run on a single BAM file from the data table.

To set up the workflow, do the following:

- Select the Run workflow(s) with inputs defined by data table radio button.

- In Step 1, select a data table as the root entity that contains the file you want to analyze.

- In Step 2, click the Select Data icon.

- Select Choose specific rows to process.

- Check the box left of the participant ID for the file you want to analyze.

- Select OK.

- From the Inputs section at the bottom of the workflow setup page, click on the attribute field for the inputFile variable.

- Select the DRS URL attribute (something like this.__bam__file_drs_uri).

- Click Save.

You will see the blue Run Analysis icon pop up.

- Click Run Analysis.

- Confirm your input when prompted.
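If the this.__bam__file_drs_uri expression in the steps above looks cryptic, it may help to think of it as "take the value in this column for the selected row". The Python sketch below illustrates that idea only. It is not how Terra evaluates expressions internally, and the function name, the row contents, and the DRS URI are hypothetical placeholders.

```python
def resolve_this_expression(expression, row):
    """Look up a `this.<column>` expression in one data-table row (a dict)."""
    prefix = "this."
    if not expression.startswith(prefix):
        raise ValueError(f"expected an expression starting with {prefix!r}")
    column = expression[len(prefix):]
    if column not in row:
        raise KeyError(f"row has no column named {column!r}")
    return row[column]


# Hypothetical row: the ID and the DRS URI are made-up placeholders,
# not values from a real HCA export.
example_row = {
    "participant_id": "participant-001",
    "__bam__file_drs_uri": "drs://example.org/placeholder-file-id",
}

# The value the workflow would receive as its inputFile for this row.
input_file = resolve_this_expression("this.__bam__file_drs_uri", example_row)
```

In this picture, choosing a different attribute for inputFile changes which column is read, while checking a different row changes which file the workflow processes.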

Terra is running the workflow now. Walk away for a few minutes, grab a coffee, stretch. You deserve it.

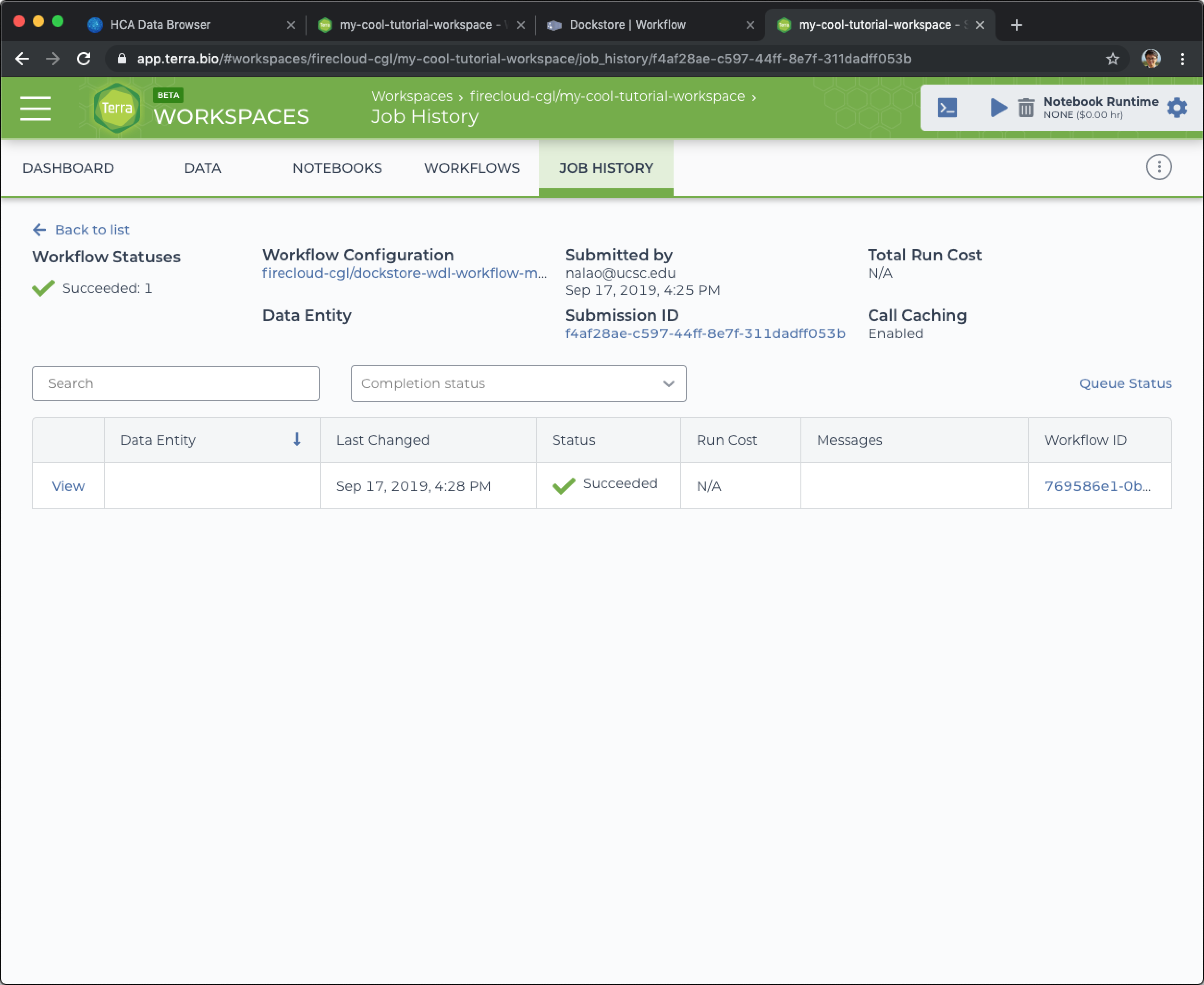

When you come back, refresh the page. Hopefully, your workflow will be done running.

After the workflow runs successfully, you'll see a green check next to the workflow submission.

Congrats! To see the results of this workflow execution, click on the Submission ID, which shows the data generated by the run.
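If you also have a local copy of the file, you could compare its checksum with the one the workflow reported. The sketch below assumes the reported value is a line in the format used by the md5sum command-line tool (a 32-character hexadecimal digest, optionally followed by a file name). We have not confirmed the exact output format of this workflow, so treat that as an assumption; the function names are our own.

```python
import hashlib


def parse_md5sum_line(line):
    """Return the hex digest from a line such as '<digest>  <file name>'."""
    parts = line.strip().split()
    if not parts:
        raise ValueError("empty checksum line")
    digest = parts[0].lower()
    # An MD5 digest is exactly 32 hexadecimal characters.
    if len(digest) != 32 or any(c not in "0123456789abcdef" for c in digest):
        raise ValueError(f"not an MD5 hex digest: {parts[0]!r}")
    return digest


def matches_local_copy(reported_line, local_bytes):
    """Check whether the reported digest equals the MD5 of `local_bytes`."""
    return parse_md5sum_line(reported_line) == hashlib.md5(local_bytes).hexdigest()
```

A result of True means the bytes you hold locally are identical to the bytes the workflow read. A result of False means the two differ, for example because of an incomplete download.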

Next steps

Try one of the tutorial workspaces dedicated to HCA data and pipelines:

- Optimus workspace for processing 10x data with the Optimus Pipeline.

- Smart-seq2 workspace for processing Smart-seq2 data with the Smart-seq2 Multi-Sample Pipeline.

- Intro-to-HCA-data-on-Terra workspace for exporting HCA data and analyzing it with community tools like Seurat, Scanpy, Cumulus, and Pegasus.